Detecting Node Event Loop Blockers

7 minute read

Node.js is a fantastic piece of technology, and we use it as the foundation for our application servers.

There is, however, one big caveat when working with Node: our application code runs (for the most part) in a single thread (the Event Loop thread). Running in a single thread is fine when the application spends most of its time doing asynchronous I/O since each request consumes only a small amount of CPU. However, when your requests perform CPU-intensive computation, all concurrent requests will be blocked waiting for it to complete!

As the Ashby backend grew in size, we ran into some CPU-intensive code paths, either due to non-optimized code that didn't scale very well or the lack of result set limits (which could cause an endpoint to return an arbitrarily large amount of data).

Join us as we take you through our journey to identify and eliminate requests that block with the help of community NPM packages and our favorite monitoring tool, Datadog.

The Node Event Loop: a Primer

This section is a brief introduction to the Node Event Loop, meant only to provide the necessary context for the rest of this post. If you want to dive deeper into this topic, we recommend Node's excellent documentation on the topic.

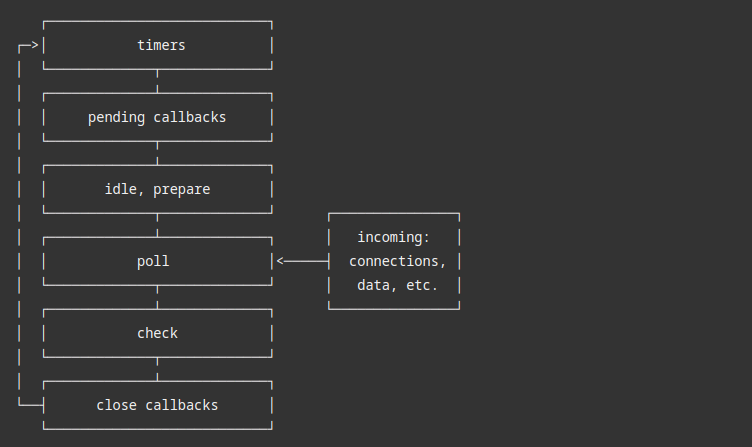

At a very high level, the Event Loop consists of a single thread running in a continuous loop, going through multiple phases, as described below:

Each phase contains a callback queue of all callbacks that need to be processed in that phase. These include various tasks, such as processing timers (scheduled by setTimeout and setInterval), processing I/O events, and executing I/O callbacks (including the code that handles incoming HTTP requests).

All callbacks in the Event Loop are processed sequentially. Suppose a specific callback performs a CPU-intensive operation without yielding the Event Loop. In that case, all other callbacks will remain in the queue and not be processed until the Event Loop frees. We call these "Event Loop Blockers."

Detecting Event Loop Blockers

When we set out to look for solutions to detect Event Loop Blockers, we found two great libraries in npmjs.com: blocked and blocked-at (in both cases, we used version 1.2.0 with Node 16). Both provide a similar interface: a threshold value and a callback that gets invoked when the Event Loop gets blocked for longer than the threshold.

One critical difference between the two, which stems from the fact that their implementations are radically different, is that blocked-at provides the callback with the stack trace for the Event Loop Blocker's entry point, whereas blocked only provides the Event Loop Blocker's duration. This extra information came at a cost: the performance hit of using blocked-at is significant, as indicated in their README:

It's recommended to detect blocking exists with something without the performance overhead and use

blocked-atin testing environment to pinpoint where the slowdown happens. Rule of thumb is you should not be running this in production unless desperate.

Let's look at how each of these libraries work and why the cost of capturing the stack trace is so high.

blocked v1.2.0

The mechanism behind blocked is ingeniously simple (the whole library comes in at just 518 bytes). When installed, it registers a timer (using

setInterval) to keep track of the time elapsed between invocations. The added delay between invocations can be a good indicator of an Event Loop Blocker since concurrent operations that block the Event Loop may delay timers from being fired. On every execution of the timer, if the drift between the desired and actual invocation times is higher than the configured threshold, the callback is invoked.

Since Event Loop Blocker detection happens in a different context from the running application (within the timer), it cannot gather any information about the source of the Event Loop Blocker. On the other hand, the work on each iteration is so fast that its overhead is minimal.

blocked-at v1.2.0

The blocked-at library uses Async Hooks to capture the additional

information about the stack trace. Node recently introduced the Async Hooks API to track asynchronous resources (such as Promises) within a Node process. A developer can register callbacks on asynchronous resource creation (init), when its callback is invoked (before), and when its callback finishes (after).

The init hook is called within the context where the resource is created, so we can capture the current stack trace. blocked-at does precisely that and stores the trace in a global map, indexed by the current triggerAsyncId (the mechanism Async Hooks use to identify asynchronous resources). Then, once the resource's callback is ready to run, the before hook is invoked, capturing the invocation's timestamp (and once again stored in the global

map where the trace is already stored). Once the callback finishes, the after hook is invoked, and the current timestamp is compared against the previously stored timestamp from the before invocation. If the difference is greater than the configured threshold, the callback is called, passing along the stack trace captured in init.

This approach, while providing valuable information, is too slow to be feasible, due to the following reasons:

- Async Hooks run on all asynchronous resources and may cause significant overhead. Recent versions of Node have improved this overhead considerably (we are on Node 16, so this isn't much of an issue).

- We can only capture the stack trace in the

inithook, so before we know whether we need to invoke the callback, we waste a considerable amount of resources. Capturing a stack trace is a relatively expensive operation, and our code will discard it most of the time (when the Event Loop is not blocked).

Our First Attempt

In our first attempt at finding our Event Loop Blockers, we wanted to get as much information as possible, so we started by measuring the performance impact of blocked-at.

After deploying it to our staging environment, we noticed that the response times for our most common GraphQL queries were a few orders of magnitude slower. Our test traffic was enough to consume all the available CPU resources in our staging instances! We also realized that the provided stack trace information wasn't particularly useful. In most cases, it pointed to the Apollo Field Resolver, which is responsible for invoking our code, instead of pointing to the code itself.

With this in mind, we circled back and replaced blocked-at with blocked. Even without the stack trace, we would at least be able to identify when the Event Loop was blocked and, with some manual investigation, figure out where the Event Loop Blocker occurred in our code.

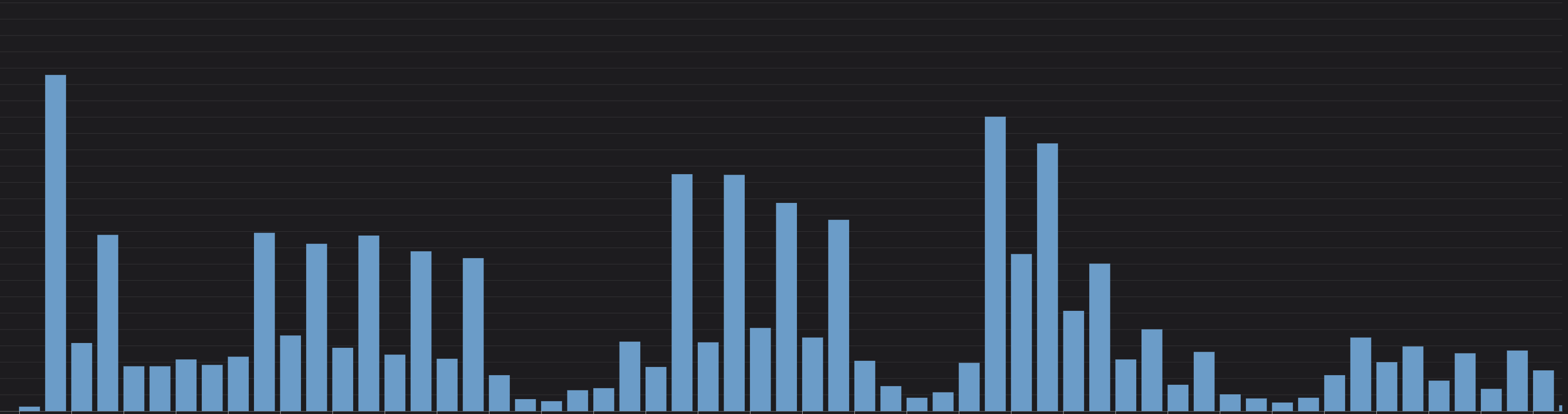

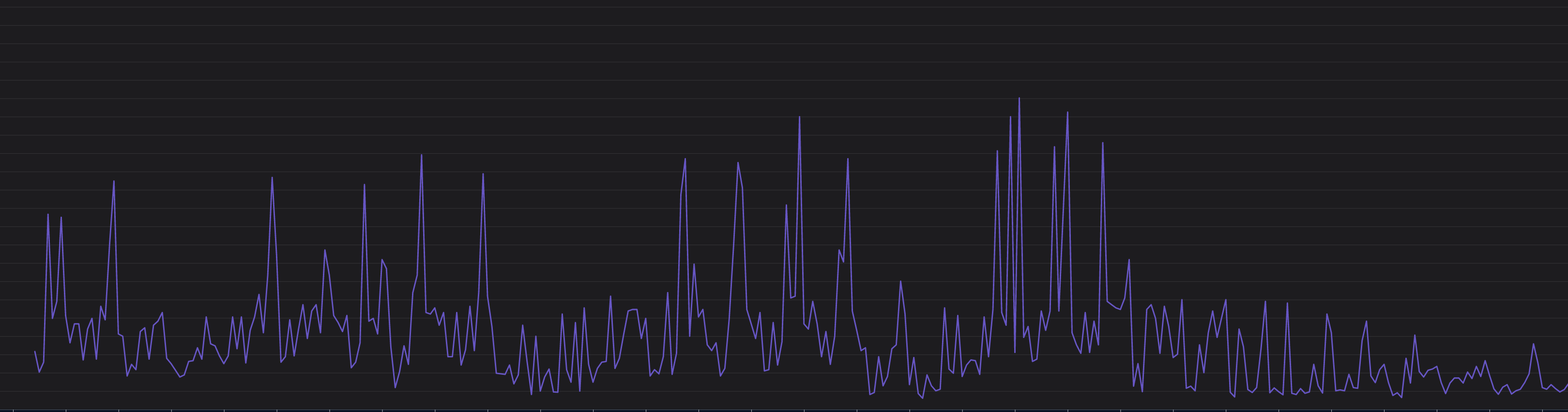

With blocked successfully deployed in production, with minimal impact to performance or resource usage (we noticed approximately 0.5% increased resource usage with the timer running every 100ms), we were, as expected, able to identify critical code paths that were contributing to the blocking issues. However, each event took nearly 5 minutes to investigate, and with hundreds of blocking events per hour, this quickly proved unsustainable.

Datadog Integration

We use multiple Datadog products in our monitoring stack, particularly Datadog APM and Datadog Log Management. When using these two products, we can connect Logs and Traces and view all log lines generated in the context of a specific HTTP Request Trace in a single interface. In our short-lived experiment with blocked-at, we noticed that the logs correctly connected with the trace for the request that generated them.

After some investigation, we noticed that the Async Hook used by blocked-at allowed us to access the reference to the

"current trace" (as Datadog was also using Async Hooks to propagate its context). What if

instead of capturing the stack trace, we used the Async Hook to surface the blocker in the Datadog Trace?

We started by writing our own Async Hook with heavy inspiration from blocked-at. Our first version did little more

than detect when a blocking event happened:

1const cache = new Map<number, [number, number]>();

2

3function before(asyncId: number) {

4 cache.set(asyncId, process.hrtime());

5}

6

7function after(asyncId: number) {

8 const cached = cache.get(asyncId);

9 if (cached == null) {

10 return;

11 }

12 cache.delete(asyncId);

13

14 const diff = process.hrtime(cached);

15 const diffNs = diff[0] * 1e9 + diff[1];

16 if (diffNs > thresholdNs) {

17 const time = diffNs / 1e6;

18 logger.warn({

19 label: "EventLoopMonitor",

20 message: `Event loop was blocked for ${time}ms`,

21 metadata: {

22 time,

23 },

24 });

25 }

26}

27

28const asyncHook = createHook({ before, after });

29

30asyncHook.enable();In the before hook, we are capturing the current timestamp (side note: we are using the deprecated process.hrtime() method, instead of process.hrtime.bigint(), as the latter has a considerable performance impact in Node 16), and storing it in the cache, indexed by the current Execution Async ID (like blocked-at does).

Then, in the after hook, we fetch the initial timestamp from the cache and compare it against the current timestamp. If the diff exceeds the configured threshold, we log a warning message (like in blocked-at, which is associated with the current Datadog Trace).

The final piece of the puzzle is surfacing the block in the trace itself, which we achieved by adding the following to the code path where the block is detected:

1const span = requestTracer.startSpan("EventLoopBlock", {

2 childOf: requestTracer.scope().active() ?? undefined,

3 startTime: new Date().getTime() - time,

4 tags: {

5 [tags.SERVICE_NAME]: "ashby-backend-event-loop-monitor",

6 },

7});

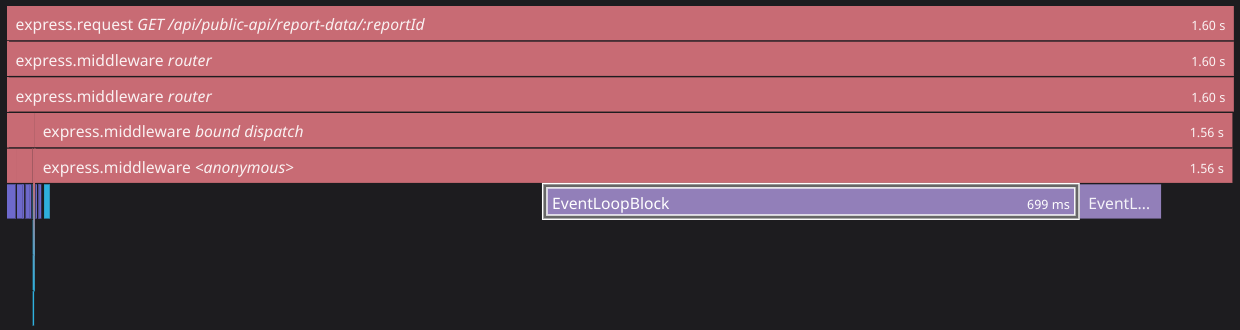

8span.finish();Here, we manually create a trace span named EventLoopBlock, which we create as a child of the "current trace." We also set the start time to be the current timestamp minus the total block time (the use of new Date().getTime() means we lose sub-millisecond precision, but this is acceptable for our use case). Doing this causes the span to be

rendered as part of our trace:

This approach meant that the total amount of work done in the "good" case (where no blockers are detected) is much

smaller than in blocked-at: two API calls to fetch the current timestamp, two cache writes, and one cache read. The

performance impact of this approach was much more acceptable to us, ranging between 1% to 2% additional CPU usage (and a comparable increase in total response time) when deployed in production.

Results

Since we deployed our new Event Loop Monitor, we have been able to identify most of the sources of Event Loop Blocking, and have both reduced our total number of Blocker events by and p99 latency of said events by up to 80%!